HH Pegasus Helper

BullMQ

Engineering: https://pegasus-helper.hh-engineering.my.id/bullmq

User: user1

Password: 1234

Production: Coming soon

Clear Cloudflare Cache

When there is a restaurant or package change, the Cloudflare cache must be cleared to reflect these changes on the website. This process involves sending an event to Kafka, which instructs `hh-pegasus-helper` to clear the cache.

Process Overview

1. Event Trigger: A change to a restaurant or package triggers an event.

2. Kafka Event: The event is sent to Kafka.

3. Queueing: `hh-pegasus-helper` receives the event and adds a cache-clearing job to the BullMQ queue.

4. Execution: A BullMQ worker picks up the job and clears the cache.
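The steps above can be sketched roughly as follows. This is a minimal, dependency-free illustration, not the actual `hh-pegasus-helper` code: the `ChangeEvent` shape, the `JobQueue` interface, the job name `clear-cloudflare-cache`, and all function names are assumptions made for the example. In the real service the queue would presumably be a BullMQ `Queue` and the processing function would run inside a BullMQ `Worker`.

```typescript
// Hypothetical event shape: the actual Kafka payload is not documented here.
interface ChangeEvent {
  type: "restaurant" | "package";
  id: number;
}

// Minimal queue abstraction so the sketch has no dependencies.
// BullMQ's Queue#add(name, data) is assumed to fit this shape.
interface JobQueue {
  add(name: string, data: ChangeEvent): Promise<unknown>;
}

// Steps 1-2: validate a raw Kafka message value before queueing it.
function parseChangeEvent(raw: string): ChangeEvent {
  const parsed: unknown = JSON.parse(raw);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("event must be a JSON object");
  }
  const { type, id } = parsed as Record<string, unknown>;
  if (type !== "restaurant" && type !== "package") {
    throw new Error(`unsupported event type: ${String(type)}`);
  }
  if (typeof id !== "number" || !Number.isInteger(id)) {
    throw new Error("event id must be an integer");
  }
  return { type: type as ChangeEvent["type"], id };
}

// Step 3: add the validated event to the queue (the job name is hypothetical).
async function enqueuePurge(raw: string, queue: JobQueue): Promise<ChangeEvent> {
  const event = parseChangeEvent(raw);
  await queue.add("clear-cloudflare-cache", event);
  return event;
}

// Step 4: the worker hands each job's data to an injected purge function.
async function processPurgeJob(
  event: ChangeEvent,
  purge: (event: ChangeEvent) => Promise<void>,
): Promise<void> {
  await purge(event);
}
```

Keeping the queue and the purge call behind small interfaces means the flow can be exercised in tests without Kafka, Redis, or Cloudflare.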

Monitoring

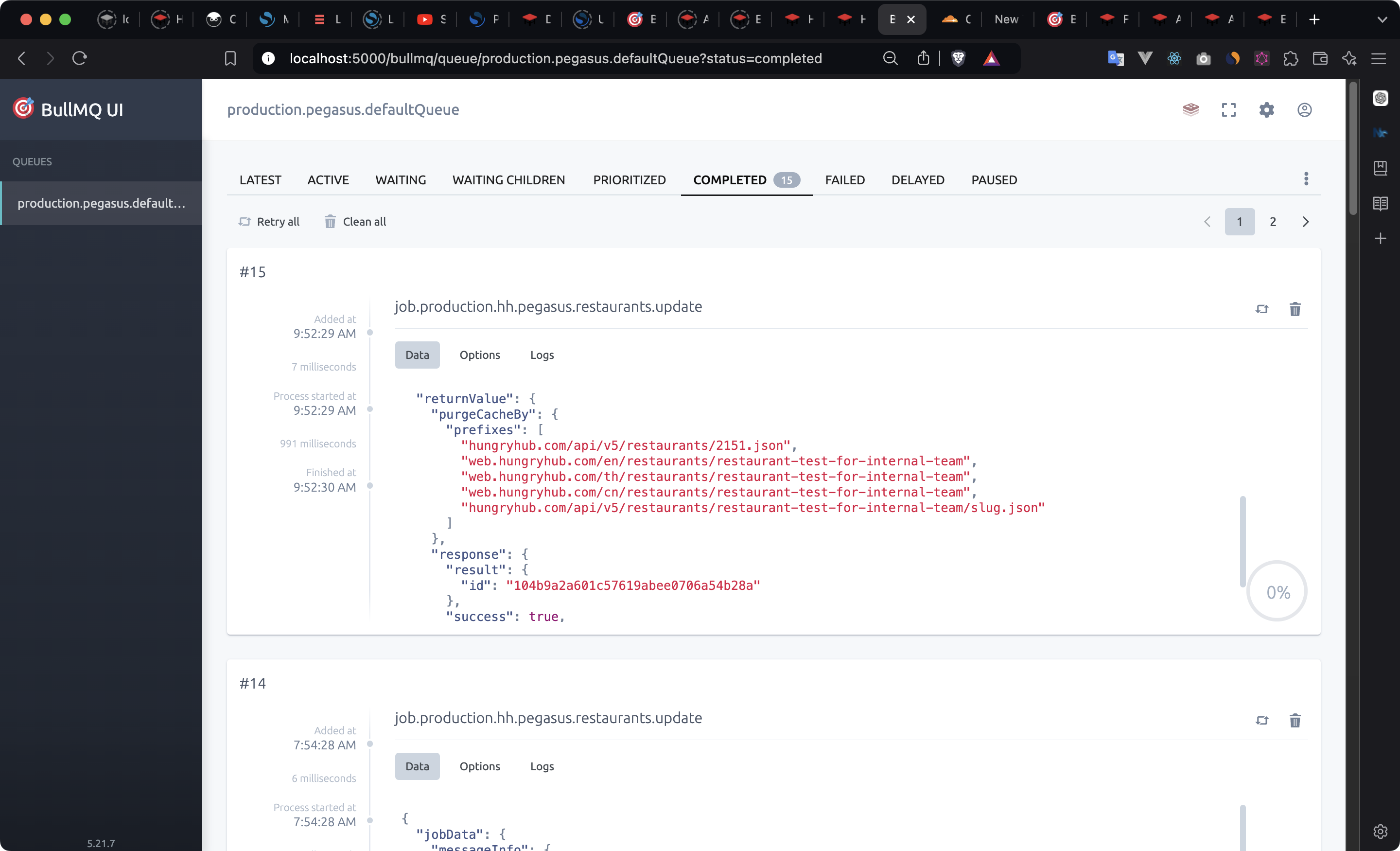

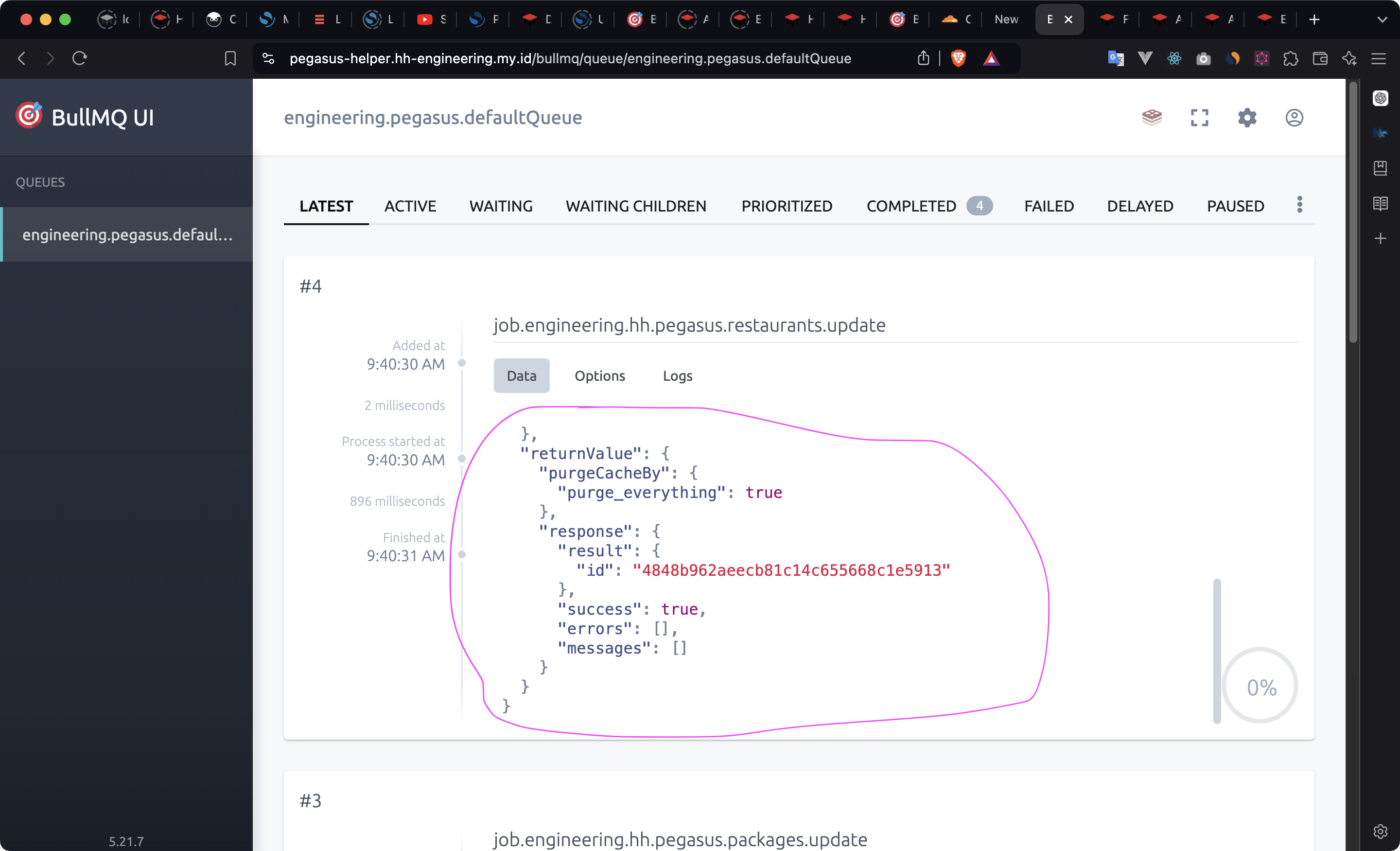

You can monitor the status of the cache clearing process on the BullMQ dashboard.

Production:

Staging:

Caveats (Backend Support Needed)

Issue 1

When a package is changed, the cache is not purged for the following API endpoint:

GET /api/v5/restaurant_packages.json?locale=en&restaurant_id=2151

This is because the query string cannot be used to target the purge. Purging by prefix would purge every cached response that shares the prefix /api/v5/restaurant_packages.json, not just the one for the affected restaurant, which is not desirable.

Proposed Solution

To address this issue, the backend can add tags to the Cache-Tag HTTP response header from the origin web server. This will enable cache purging by tags.

Example:

Cache-Tag: package_1,package_2,package_3

For more information, refer to the Cloudflare documentation on purging cache by tags.

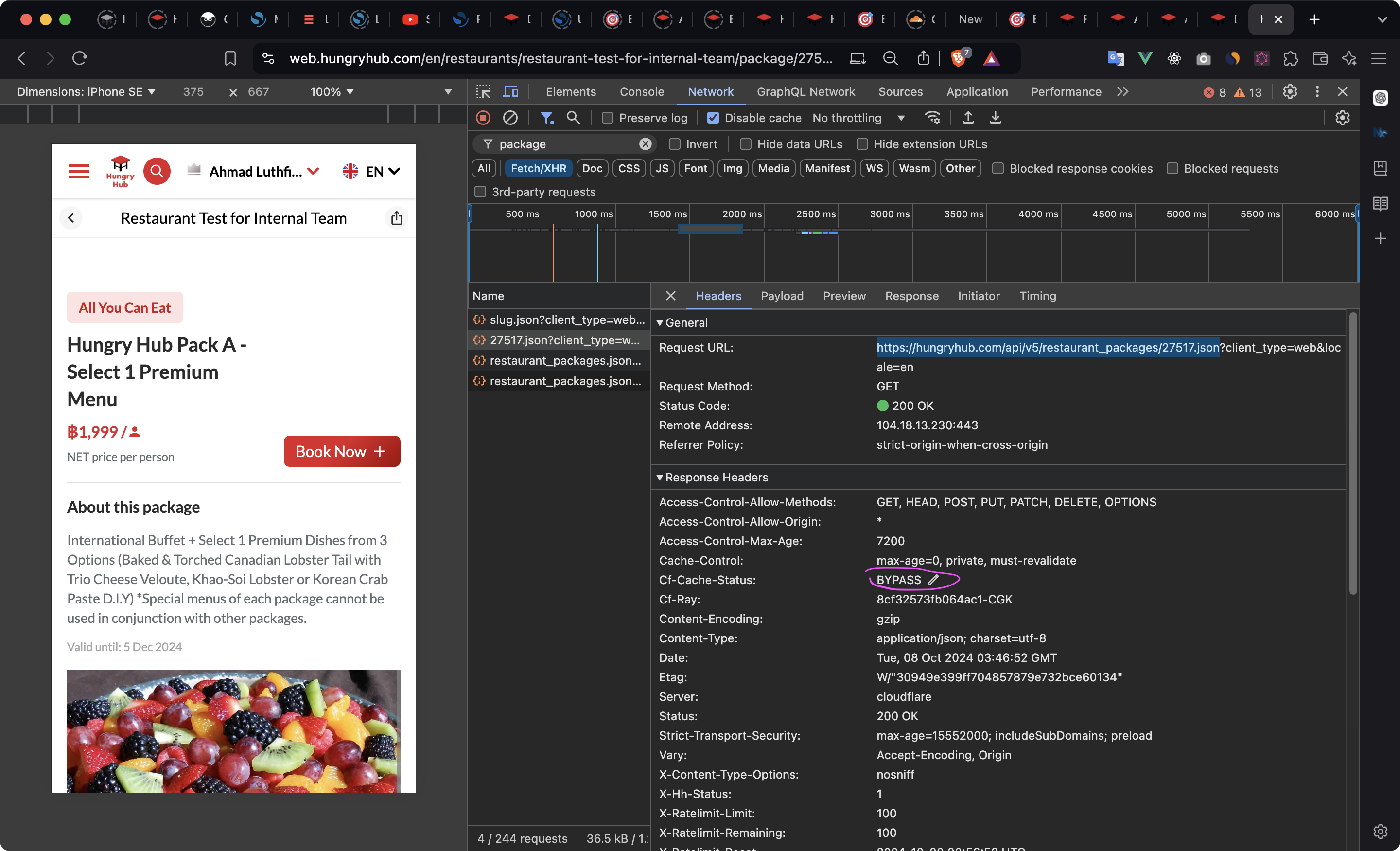
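If the backend adds such tags, the purge call could look roughly like the sketch below. It builds a request for Cloudflare's zone `purge_cache` endpoint with a `tags` body and a bearer token. The `PurgeRequest` type, the function names, and the `package_<id>` naming scheme (taken from the example header above) are assumptions for illustration only, not the helper's actual code.

```typescript
// Plain description of the HTTP request, so it can be inspected before sending.
interface PurgeRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Hypothetical tag naming scheme, following the Cache-Tag example above.
function packageTag(packageId: number): string {
  return `package_${packageId}`;
}

// Builds a purge-by-tag request for POST {apiBase}/zones/{zoneId}/purge_cache.
function buildPurgeByTagRequest(
  apiBase: string,
  zoneId: string,
  token: string,
  tags: string[],
): PurgeRequest {
  if (tags.length === 0) {
    throw new Error("at least one tag is required");
  }
  return {
    url: `${apiBase}/zones/${zoneId}/purge_cache`,
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ tags }),
  };
}
```

The returned object can be passed to an HTTP client, for example `fetch(req.url, req)`, with `apiBase`, `zoneId`, and `token` coming from `CLOUDFLARE_API`, `CLOUDFLARE_ZONE_ID`, and `CLOUDFLARE_TOKEN`.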

Issue 2

Cloudflare always bypasses the cache for the API https://hungryhub.com/api/v5/restaurant_packages/27517.json. This is likely due to the Cache-Control header on the response: Cache-Control: max-age=0, private, must-revalidate. By default, Cloudflare does not cache responses marked private or with max-age=0.

Is this behavior expected?
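To make the suspicion easier to check, here is a small sketch that lists which directives in a Cache-Control value would normally stop a shared cache such as a CDN from storing the response. It is a simplified approximation and not Cloudflare's exact decision logic. The function names are hypothetical.

```typescript
// Parses a Cache-Control value into lowercase directive names and optional values.
function parseCacheControl(header: string): Map<string, string | undefined> {
  const directives = new Map<string, string | undefined>();
  for (const part of header.split(",")) {
    const [name, value] = part.trim().split("=", 2);
    if (name) {
      directives.set(name.toLowerCase(), value?.trim());
    }
  }
  return directives;
}

// Returns the directives that prevent shared caching (simplified check).
function sharedCacheBlockers(header: string): string[] {
  const directives = parseCacheControl(header);
  const blockers: string[] = [];
  for (const name of ["private", "no-store", "no-cache"]) {
    if (directives.has(name)) {
      blockers.push(name);
    }
  }
  // s-maxage takes precedence over max-age for shared caches.
  const ttlName = directives.has("s-maxage") ? "s-maxage" : "max-age";
  const ttl = directives.get(ttlName);
  if (ttl !== undefined && /^0+$/.test(ttl)) {
    blockers.push(`${ttlName}=0`);
  }
  return blockers;
}
```

For the header quoted above, `sharedCacheBlockers("max-age=0, private, must-revalidate")` returns `["private", "max-age=0"]`, so either directive alone would be enough to explain the bypass.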

Production Deployment

Branch: main

Env:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: hh-pegasus-helper-config
    data:
      NODE_ENV: production
      FASTIFY_PORT: '5000'
      FASTIFY_HOST: '0.0.0.0'
      REDIS_HOST: <same as hh-search production>
      REDIS_PASSWORD: <same as hh-search production>
      REDIS_PORT: <same as hh-search production>
      REDIS_TLS: 'false'
      KAFKA_BROKER_LIST: kafka-hh-production.a.aivencloud.com:25942
      KAFKA_SECURITY_PROTOCOL: ssl
      KAFKA_GROUP_ID: hh-pegasus-helper-group-id
      ROLLBAR_ACCESS_TOKEN: 9927e741c87641bda3f8cd42f2534ef8
      ROLLBAR_ENABLED: 'true'
      WEB_DOMAIN: web.hungryhub.com
      API_DOMAIN: hungryhub.com/api/v5
      CLOUDFLARE_API: https://api.cloudflare.com/client/v4
      CLOUDFLARE_TOKEN: x3d5TBPsq7bYBjWpEeBhPguhjXbR4XcARUHOQ-x2
      CLOUDFLARE_ZONE_ID: 104b9a2a601c57619abee0706a54b28a

I think Redis can use the same server as hh-search. What do you think?
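Since every ConfigMap value reaches the process as a string, a small validation step at startup can catch missing or malformed settings early. The sketch below is one possible way to do that. The `HelperConfig` shape, the choice of which keys are required, and the comma-separated interpretation of `KAFKA_BROKER_LIST` are assumptions, not the service's actual behavior.

```typescript
// Typed view of the settings this sketch reads (a subset of the ConfigMap).
interface HelperConfig {
  redis: { host: string; port: number; password: string | undefined; tls: boolean };
  kafka: { brokers: string[]; groupId: string };
  cloudflare: { apiBase: string; token: string; zoneId: string };
}

type Env = Record<string, string | undefined>;

// Returns the value of a required key or throws a descriptive error.
function requireEnv(env: Env, key: string): string {
  const value = env[key];
  if (value === undefined || value === "") {
    throw new Error(`missing required config key: ${key}`);
  }
  return value;
}

function loadConfig(env: Env): HelperConfig {
  const port = Number(requireEnv(env, "REDIS_PORT"));
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error("REDIS_PORT must be a positive integer");
  }
  return {
    redis: {
      host: requireEnv(env, "REDIS_HOST"),
      port,
      password: env["REDIS_PASSWORD"],
      // Values arrive as strings, so 'false' must be compared explicitly.
      tls: env["REDIS_TLS"] === "true",
    },
    kafka: {
      // Assumes a comma-separated list, as the key name suggests.
      brokers: requireEnv(env, "KAFKA_BROKER_LIST").split(",").map((b) => b.trim()),
      groupId: requireEnv(env, "KAFKA_GROUP_ID"),
    },
    cloudflare: {
      apiBase: requireEnv(env, "CLOUDFLARE_API"),
      token: requireEnv(env, "CLOUDFLARE_TOKEN"),
      zoneId: requireEnv(env, "CLOUDFLARE_ZONE_ID"),
    },
  };
}
```

In a Node.js service this would typically be called once at startup as `loadConfig(process.env)`.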